Comparing different Logging Solutions in Clojure

I log you 3000

Logging might not be flashy, but it’s the heartbeat of every reliable application. In this project, we pit four of Clojure's logging contenders against each other in a no-holds-barred showdown: `pprint`, `println`, `clojure.tools.logging` with Log4j, and Timbre.

One of the most crucial factors when evaluating logging solutions is execution time. For this, we use three input files of varying sizes to simulate different workloads:

```
benchmarks/
├── log_inputs_3000
├── log_inputs_10000
└── log_inputs_1000000
```

Each file contains a specific number of lines: 3,000 for small workloads, 10,000 for medium workloads, and 1,000,000 for large workloads. The files are processed line by line, and each line is logged using one of four logging functions.
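The contents of the input files are not shown, but files of this shape are easy to generate. The sketch below assumes each line is a synthetic placeholder message; `write-log-inputs!` is a hypothetical helper, not part of the original project.

```clojure
(require '[clojure.java.io :as io])

;; Hypothetical helper: the real files' line format is not described,
;; so every line here is a synthetic placeholder message.
(defn write-log-inputs!
  "Writes n synthetic log lines to path, one line per log entry."
  [path n]
  ;; Create benchmarks/ (or any other parent directory) if it is missing.
  (io/make-parents path)
  (with-open [^java.io.BufferedWriter w (io/writer path)]
    (dotimes [i n]
      (.write w (str "sample log message " i))
      (.newLine w))))

(comment
  ;; Generate the three workloads under benchmarks/.
  (doseq [n [3000 10000 1000000]]
    (write-log-inputs! (str "benchmarks/log_inputs_" n) n)))
```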

Here are the logging functions we use:

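```clojure
;; Assumed requires (the original snippets omit them):
;;   [clojure.tools.logging :as l4j]
;;   [clojure.pprint :refer [pprint]]
;;   [taoensso.timbre :as timbre]
;; All four variants share the name log-line, so only one can be active
;; in a namespace at a time (e.g. one namespace per variant).

;; The docstring goes before the parameter vector so that it is attached
;; to the var instead of being evaluated and discarded.
(defn log-line
  "Logs a single line using Log4j through clojure.tools.logging."
  [line]
  (l4j/info line))

(defn log-line
  "Logs a single line using pprint."
  [line]
  (pprint line))

(defn log-line
  "Logs a single line using println."
  [line]
  (println line))

(defn log-line
  "Logs a single line using Timbre."
  [line]
  (timbre/info line))
```

To measure the execution time of these functions, we implement the following helper functions: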

```clojure
(require '[clojure.java.io :as io])

(defn read-and-log
  "Reads input-file line by line and passes each line to logging-fn.
  Returns true on success, or false if any exception is thrown."
  [logging-fn input-file]
  (try
    ;; io/reader already returns a java.io.BufferedReader, and line-seq
    ;; reads it one line at a time.
    (with-open [rdr (io/reader input-file)]
      (doseq [line (line-seq rdr)]
        (logging-fn line)))
    true
    (catch Exception _
      false)))

(defn benchmark
  "Measures the execution time of (apply f args) in nanoseconds.
  The return value of f is discarded, so a failed read-and-log
  still yields a time."
  [f & args]
  (let [start-time (System/nanoTime)]
    (apply f args)
    {:exec-time (- (System/nanoTime) start-time)}))

(defn ilogu3000
  "Times read-and-log with logging function lfn over inputfile."
  [lfn inputfile]
  (benchmark read-and-log lfn inputfile))
```

The `ilogu3000` function takes a logging function and an input file name as arguments. It uses `benchmark` to measure how long it takes to read and log every line in the file, and returns the result as a map of the form `{:exec-time nanoseconds}`. This setup provides a consistent way to evaluate the performance of each logging solution under different workloads.
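The measurements presumably come from calling `ilogu3000` for each logging function on each input file. A hypothetical driver, `run-all` (not part of the original project), that does this could look like the sketch below; it repeats simplified versions of the helpers so it runs on its own.

```clojure
(require '[clojure.java.io :as io])

;; Simplified copies of the helpers above, so this sketch is self-contained.
(defn read-and-log [logging-fn input-file]
  (try
    (with-open [rdr (io/reader input-file)]
      (doseq [line (line-seq rdr)]
        (logging-fn line)))
    true
    (catch Exception _ false)))

(defn benchmark [f & args]
  (let [start-time (System/nanoTime)]
    (apply f args)
    {:exec-time (- (System/nanoTime) start-time)}))

(defn ilogu3000 [lfn inputfile]
  (benchmark read-and-log lfn inputfile))

;; Hypothetical driver: times every logging function against every input
;; file. doall forces the lazy `for`, so all benchmarks run before returning.
(defn run-all
  [logging-fns input-files]
  (doall
   (for [[logger-name lfn] logging-fns
         file input-files]
     (assoc (ilogu3000 lfn file)
            :logger logger-name
            :file file))))

(comment
  ;; println and pprint are passed directly; the Log4j and Timbre
  ;; log-line variants would be added to the map the same way.
  (run-all {"println" println, "pprint" clojure.pprint/pprint}
           ["benchmarks/log_inputs_3000" "benchmarks/log_inputs_10000"]))
```

Each result keeps the `:exec-time` map produced by `benchmark` and adds `:logger` and `:file`, so the results can be grouped by file or by logger for plotting.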

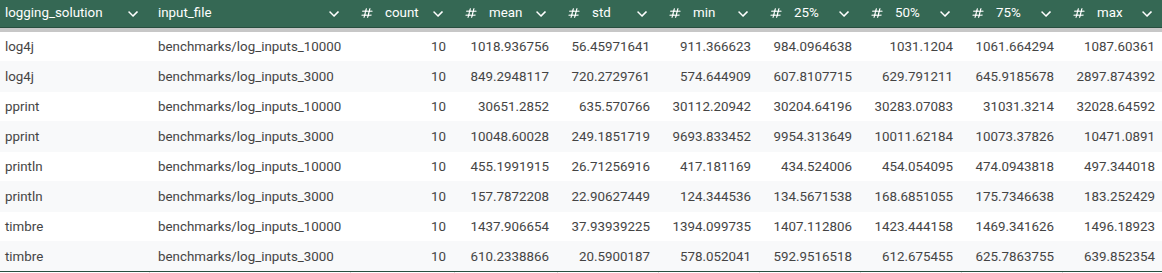

The 3,000- and 10,000-line files were each run multiple times and the results averaged to obtain a more reliable measurement. The 1,000,000-line file was run only once.
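Averaging repeated runs, as done for the smaller files, can be sketched as follows. `average-exec-time` is a hypothetical helper, not part of the original project; since the number of runs used is not stated, it is left as a parameter.

```clojure
;; Hypothetical helper (not from the original project): calls f n times,
;; timing each call with System/nanoTime, and summarizes the runs.
(defn average-exec-time
  "Calls (f) n times (n >= 1) and returns the run count, the mean time
  and the fastest time, both in nanoseconds."
  [n f]
  (let [times (vec (repeatedly n
                               (fn []
                                 (let [start (System/nanoTime)]
                                   (f)
                                   (- (System/nanoTime) start)))))]
    {:runs    n
     :mean-ns (/ (reduce + times) (double n))
     :min-ns  (apply min times)}))

(comment
  ;; Assumes read-and-log and a log-line variant from above are loaded.
  (average-exec-time 10 #(read-and-log log-line "benchmarks/log_inputs_3000")))
```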

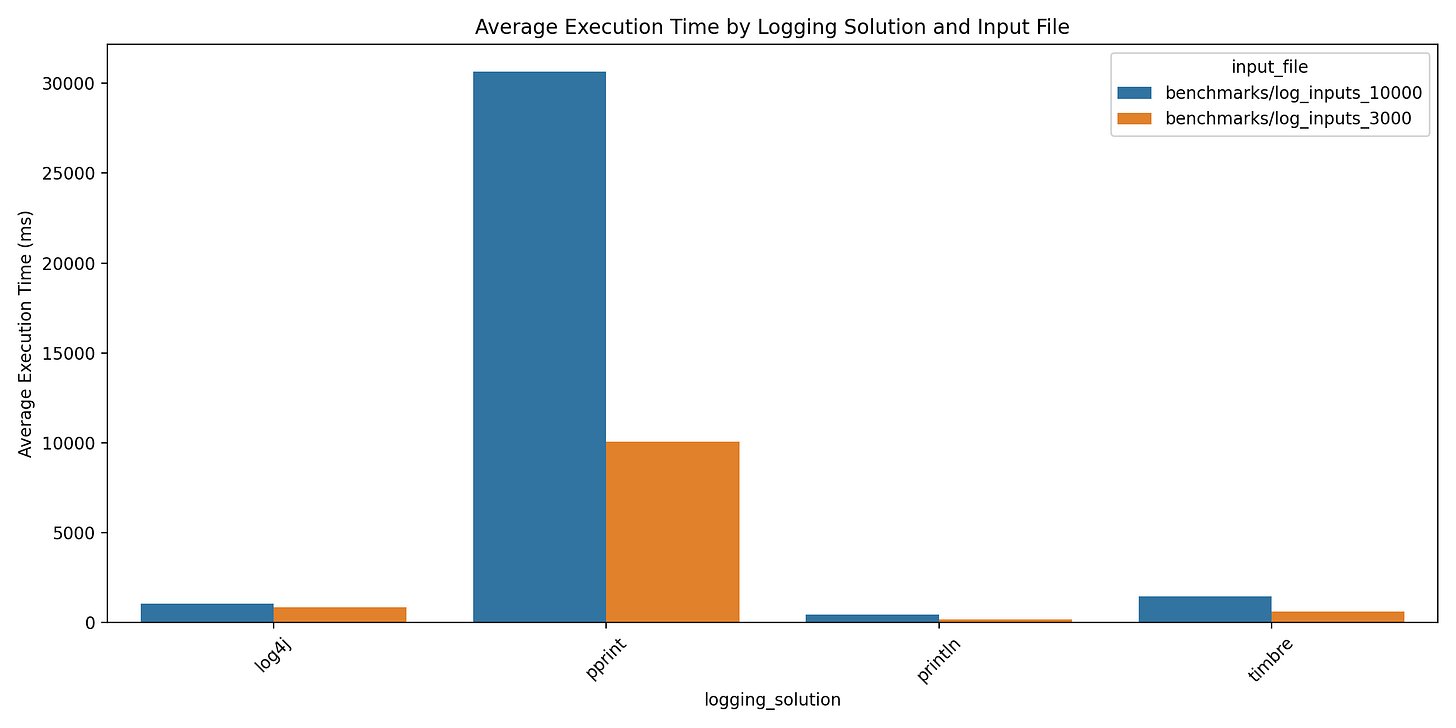

The following graphs show the performance of each logging function on the different inputs:

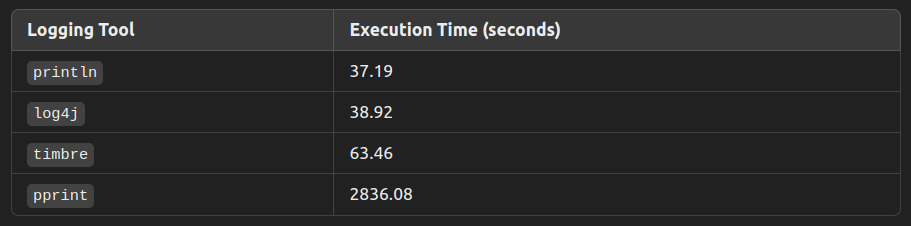

`println` is the most efficient, with the fastest logging times across all file sizes, including 1,000,000 logs. `log4j` and `timbre` are suited to more feature-rich logging but incur higher execution times, especially on larger datasets. `pprint` has the highest execution times, which makes it the least efficient choice for large numbers of log entries; it should be used sparingly, for small log volumes or where detailed formatting is necessary.

Based on the performance insights:

For efficiency and speed: If logging speed is your top priority, `println` is the best choice, since it was the fastest across all file sizes. However, it lacks features such as log levels, log rotation, and integration with external systems, which makes it unsuitable for production.

For feature-rich logging: If you require more advanced features, such as different log levels, output formats, or integration with external systems, Log4j and Timbre are great options despite their higher execution time. They are more suitable for production environments where rich logging capabilities are needed.

For small datasets or formatted output: If you need detailed formatting and don’t expect a large volume of logs, `pprint` is an option, but its high execution times make it a poor fit for larger volumes.

In conclusion, if logging performance is critical and you don't need advanced features, println is the optimal solution. If you require rich logging features and can tolerate higher execution times, Log4j or Timbre would be better suited.

Environment

Hardware Specifications:

CPU: Intel(R) Core(TM) i5-9300HF CPU @ 2.40GHz - 4 Cores

RAM: 8GB (DDR4)

Software:

OS Version: Ubuntu 22.04.1

Kernel Version: Linux

Java Version: OpenJDK 17.0.11 (2024-04-16 LTS)

Clojure Version: 1.11.1

A possible next step: Clojure has a dedicated benchmarking library, Criterium. It would be worth checking whether it provides any benefit over the homegrown `benchmark` function used here.
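Criterium's `bench` and `quick-bench` macros (namespace `criterium.core`) warm up the JVM before measuring, run the expression many times, and report statistics such as the mean and standard deviation, none of which the homegrown `benchmark` function does on its own. The warm-up idea can be sketched in plain Clojure as below; `bench-with-warmup` is a hypothetical helper and a much simpler stand-in, not a reimplementation of Criterium.

```clojure
;; Hypothetical sketch of the warm-up idea behind Criterium; it is NOT
;; Criterium's algorithm and computes only a mean and a population
;; standard deviation.
(defn bench-with-warmup
  "Calls (f) warmup-runs times untimed, then times measured-runs calls
  (measured-runs >= 1). Returns {:mean-ns .. :sd-ns ..}."
  [warmup-runs measured-runs f]
  ;; Untimed runs give the JIT a chance to compile the hot path first.
  (dotimes [_ warmup-runs] (f))
  ;; Request a GC so warm-up garbage is less likely to be collected during
  ;; measurement (System/gc is only a hint to the JVM).
  (System/gc)
  (let [times    (vec (repeatedly measured-runs
                                  (fn []
                                    (let [start (System/nanoTime)]
                                      (f)
                                      (- (System/nanoTime) start)))))
        n        (double measured-runs)
        mean     (/ (reduce + times) n)
        variance (/ (reduce + (map (fn [t] (let [d (- t mean)] (* d d))) times))
                    n)]
    {:mean-ns mean
     :sd-ns   (Math/sqrt variance)}))

(comment
  ;; Assumes read-and-log from above is loaded.
  (bench-with-warmup 5 20 #(read-and-log println "benchmarks/log_inputs_3000")))
```

With Criterium on the classpath and `criterium.core` required, the corresponding measurement would be `(criterium.core/quick-bench (read-and-log println "benchmarks/log_inputs_3000"))`, which prints its own summary. Because it repeats the expression many times, it may take a long while on the 1,000,000-line file.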